Artificial Intelligence & Bias

Recently I attended a roundtable where, quite interestingly, we discussed the “bias” that comes out of AI-ML (Artificial Intelligence and Machine Learning) systems. As an engineer working in this field, I was left pondering some aspects that I thought I would share. There are instances of AI-driven algorithms that seem to bring out biases of various kinds, such as gender, social and ethnic bias.

One of the participants brought up a very interesting point: there is apparently an AI-driven bail program that decides who should be granted bail and who shouldn’t. The nature of this particular problem could have produced false-positive results that affected the verdicts. It could also be the result of human bias while labeling the data for the problem. Are the people responsible for labeling this kind of data fully aware of the law? Does their personal thought process affect the result? These are still unanswered questions. It is definitely a process that will improve in the future. Apparently, AI-generated legal documents are already more efficient, faster and more accurate than those drafted by lawyers.

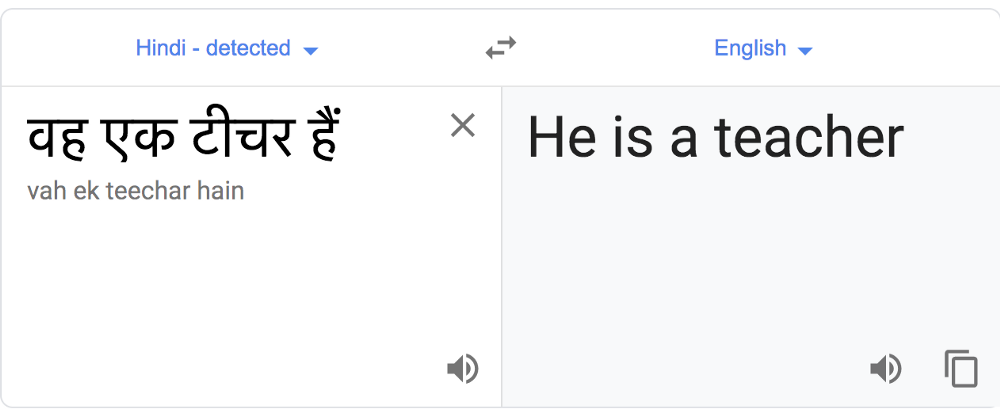

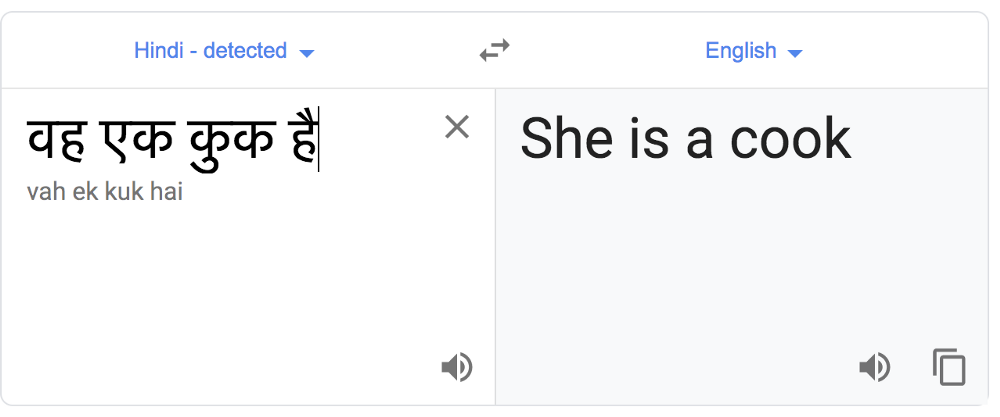

Someone mentioned that Google Translate assigns a gender to text translated from Hebrew based on the type of profession. Yes, you read that right. It demonstrates a case of gender bias. Coming from a computer science background and working with data every day, I was shocked. I was curious to try it in my own mother tongue and check the results. Let me share an example:

Example for He

Example for She

In Hindi, a gender-neutral word like “वह” can be used interchangeably for “He/She/It”. By its usage, it is meant to be gender neutral. But when the same word is used in the Google Translate app, it assigns a gender to the sentence based on some data.

I certainly don’t know the real rationale behind it. Or is the machine trying to tell us that if someone is a cook, they have to be a SHE rather than a HE, and similarly for a “teacher”?
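Google has not explained how its system picks a pronoun, so the following is only a toy illustration, not how Google Translate actually works. It assumes a hypothetical translator that, for a gender-neutral source pronoun, simply picks whichever English pronoun appears most often with the profession in its training corpus. The corpus counts are invented purely to show how skewed data turns into a gendered output.

```python
from collections import Counter

# Invented co-occurrence counts of (profession -> English pronoun) in a
# hypothetical training corpus. Not real data; only the skew matters here.
CORPUS_COUNTS = {
    "cook": Counter({"she": 820, "he": 310}),
    "teacher": Counter({"she": 640, "he": 290}),
    "doctor": Counter({"he": 910, "she": 150}),
}


def pick_pronoun(profession: str) -> str:
    """Frequency-driven choice: return the pronoun seen most often with the profession."""
    counts = CORPUS_COUNTS.get(profession)
    if not counts:
        return "they"  # no evidence at all: fall back to a neutral pronoun
    pronoun, _ = counts.most_common(1)[0]
    return pronoun


def translate_neutral(profession: str) -> str:
    """Render a gender-neutral source sentence (e.g. Hindi "वह ... है") the naive way.

    The corpus skew alone decides which gender appears in the output.
    """
    pronoun = pick_pronoun(profession)
    verb = "are" if pronoun == "they" else "is"
    return f"{pronoun.capitalize()} {verb} a {profession}."


def translate_neutral_all(profession: str) -> list[str]:
    """One alternative design: keep the ambiguity and offer every gendered reading."""
    return [f"{p} is a {profession}." for p in ("He", "She")]


if __name__ == "__main__":
    for job in ("cook", "teacher", "doctor"):
        print(translate_neutral(job))
    print(translate_neutral_all("cook"))
```

Nothing in `translate_neutral` mentions gender explicitly, yet it answers “She is a cook.” and “He is a doctor.” because that is what the (made-up) data says most often. Returning every reading, as `translate_neutral_all` does, is just one possible way to avoid silently picking a gender.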

As a woman engineer in tech, I had never thought about these aspects. These conversations made me realize that we still live in an unconsciously biased world and that we have succumbed to it. The biases displayed by humans are now propagating into machine intelligence. How, then, can we claim that machines are going to be better than humans? This is still an area of deep research. We think about quality, security and many other things while writing even a single line of code. Will we start treating bias as an important design criterion too?

This is as interesting and thought-provoking as it gets. Alexa and Siri sound like female names, so is it true that we are more comfortable giving orders to women than to men? I believe Google did a good job, then, by naming its device “Google Home” and its trigger phrase “Ok/Hey Google”. :) Well, this is something to introspect on.

Give it a thought: what if AI-driven recommendations start to be based on name, gender or ethnicity instead of skills? Wouldn’t that be scary? This requires awareness from one and all, so that we don’t build algorithms that are complicit in these norms. AI systems are trained on data generated by society, and we can’t expect them to be better than that data unless we explicitly design them to be. Otherwise, our unconscious bias will be inherited by these algorithms, and they will become a reflection of a society that is not always fair to everyone.
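One way to make “explicitly designing it to be” concrete is to measure a recommender’s outcomes per group before trusting it. The sketch below computes the selection rate for each group and the ratio of the lowest rate to the highest. A ratio under 0.8 is often flagged using the “four-fifths” rule of thumb from US hiring guidelines; treat that threshold, and the sample decisions (which are invented), as assumptions for illustration. This is one simple check, not a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive recommendations per group.

    `decisions` is an iterable of (group, recommended) pairs, where
    `recommended` is True if the system recommended the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, recommended in decisions:
        totals[group] += 1
        if recommended:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favoured
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Invented recommender output for two groups of equally skilled candidates.
    decisions = (
        [("women", True)] * 3 + [("women", False)] * 7
        + [("men", True)] * 6 + [("men", False)] * 4
    )
    rates = selection_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    print(rates)
    print(f"ratio = {ratio:.2f}", "FLAG: possible bias" if ratio < 0.8 else "ok")
```

Here the invented data gives selection rates of 0.3 and 0.6, a ratio of 0.5, so the check flags the system even though skill was never part of the comparison.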

I have read about many top companies working on AI ethics and using sophisticated algorithms to counteract bias. There are organizations like OpenAI working towards AI solutions that benefit humanity as a whole. I still believe it is everyone’s social responsibility to be aware of these biases and to build better systems. It is important to ask the right questions to make solutions better and fairer for everyone.